PySpark Read Text File

PySpark can load plain text either as an RDD or as a DataFrame. Text files, because of their free form, can contain data laid out in a very convoluted fashion; an array of dictionary-like data inside a JSON file, for instance, can throw an exception when read into PySpark. The `pyspark.sql` module is used for working with structured data. Using the `SparkContext.textFile()` method we can read a text (.txt) file into an RDD; its `name` parameter (a `str`) is the directory to the input data files… The related `SparkContext.wholeTextFiles()` takes `use_unicode: bool = True` and returns `pyspark.rdd.RDD[Tuple[str, str]]`. On the DataFrame side, `spark.read.text()` loads text files and returns a DataFrame whose schema starts with a string column named `value`, followed by partitioned columns if there are any; a DataFrame can also be written into a text file. To keep things simple, this tutorial uses files from the local system, or a Python list, to create RDDs. Both methods are covered below.

Spark SQL provides `spark.read.text('file_path')` to read a single text file or a directory of files into a Spark DataFrame, and Spark offers several read options that help you read files. With the RDD API you can also read all text files from a directory into a single RDD. PySpark likewise supports reading CSV files that use a pipe, comma, tab, space, or any other delimiter/separator. A small DataFrame for experimenting can be built with `df = spark.createDataFrame([("a",), ("b",), ("c",)], schema=["alphabets"])`.

To create an RDD, start from a `SparkContext`: `from pyspark import SparkContext, SparkConf`, then `conf = SparkConf().setAppName("myFirstApp").setMaster("local")` and `sc = SparkContext(conf=conf)`, followed by `textFile = sc.textFile`… The signature is `SparkContext.textFile(name, minPartitions=None, use_unicode=True)`. The following read options can be used when reading from log text files… For CSV data, PySpark can read a single CSV file into a DataFrame, read multiple CSV files, or read all CSV files in a directory, and Parquet files can be read as well.
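As a minimal sketch of the RDD route, assuming a local Spark installation: the helper names `read_lines`, `read_whole_files` and `main`, and the paths used in `main`, are hypothetical and not taken from the original article. `textFile()` accepts several inputs as one comma-separated string, and each entry may be a file, a directory, or a glob pattern.

```python
def read_lines(sc, paths):
    """Return an RDD with one element per line across all given paths."""
    # textFile() takes a single string; several inputs are comma-separated.
    return sc.textFile(",".join(paths))


def read_whole_files(sc, directory):
    """Return an RDD of (file_path, file_content) pairs, one per file."""
    return sc.wholeTextFiles(directory)


def main():
    # Imported here so the helpers above load even where Spark is absent.
    from pyspark import SparkConf, SparkContext

    conf = SparkConf().setAppName("myFirstApp").setMaster("local")
    sc = SparkContext(conf=conf)
    try:
        # Hypothetical inputs: one file plus every .txt file in a directory.
        lines = read_lines(sc, ["data/a.txt", "data/logs/*.txt"])
        print(lines.count())
    finally:
        sc.stop()
```

Calling `main()` on a machine with PySpark installed would count the lines across the listed inputs. Passing the context in as an argument keeps the helpers reusable with an existing `SparkContext`.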

Importing necessary libraries: first, we need to import the necessary PySpark libraries. Once that is done, you can read all text files from a directory, or multiple text files, into a single RDD.

For the RDD readers, the `use_unicode` option was added in Spark 1.2. For example, if you have the following files…

PySpark Read JSON file into DataFrame Cooding Dessign

Web in this article let’s see some examples with both of these methods using scala and pyspark languages. Web an array of dictionary like data inside json file, which will throw exception when read into pyspark. Importing necessary libraries first, we need to import the necessary pyspark libraries. Create rdd using sparkcontext.textfile() using textfile() method we can read a text.

PySpark Tutorial 10 PySpark Read Text File PySpark with Python YouTube

Web how to read data from parquet files? Loads text files and returns a sparkdataframe whose schema starts with a string column named value, and followed by partitioned columns if there are any. This article shows you how to read apache common log files. # write a dataframe into a text file. Web when i read it in, and sort.

How to read CSV files using PySpark » Programming Funda

Web a text file for reading and processing. Pyspark out of the box supports reading files in csv, json, and many more file formats into pyspark dataframe. Web sparkcontext.textfile(name, minpartitions=none, use_unicode=true) [source] ¶. Web from pyspark import sparkcontext, sparkconf conf = sparkconf ().setappname (myfirstapp).setmaster (local) sc = sparkcontext (conf=conf) textfile = sc.textfile. 0 if you really want to do this.

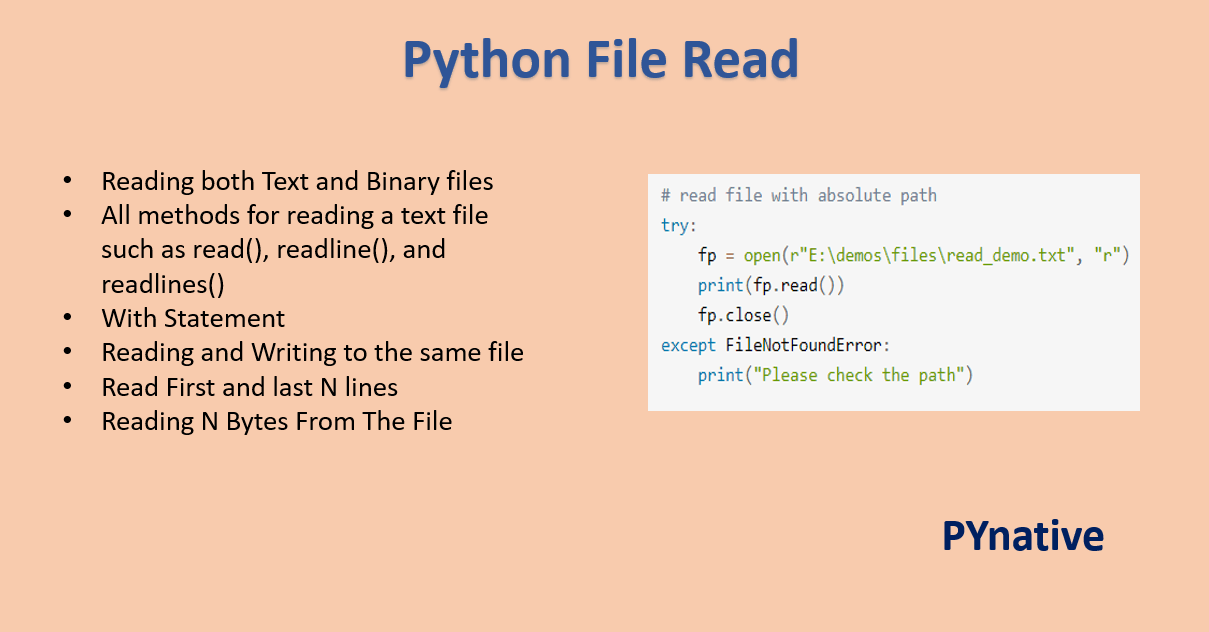

Reading Files in Python PYnative

Loads text files and returns a sparkdataframe whose schema starts with a string column named value, and followed by partitioned columns if there are any. This article shows you how to read apache common log files. Df = spark.createdataframe( [ (a,), (b,), (c,)], schema=[alphabets]). The spark.read () is a method used to read data from various data sources such as.

Read Parquet File In Pyspark Dataframe news room

Web create a sparkdataframe from a text file. To read this file, follow the code below. Read all text files matching a pattern to single rdd; Here's a good youtube video explaining the components you'd need. Basically you'd create a new data source that new how to read files.

Handle Json File Format Using Pyspark Riset

A forum question on this topic describes reading a text file in and sorting it into 3 distinct columns. The documentation example for writing a DataFrame into a text file and reading it back begins with `import tempfile` and `with tempfile.TemporaryDirectory() as d:`.
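One way to get three columns out of each line is to split it before building a DataFrame. The sketch below assumes whitespace-separated fields with free text in the third column; that layout, and the name `to_three_columns`, are assumptions, since the data from the original question is not shown.

```python
def to_three_columns(line, sep=None):
    """Split a line into exactly three fields, padding short lines with ""."""
    # maxsplit=2 keeps any further separators inside the third field.
    parts = line.strip().split(sep, 2)
    parts += [""] * (3 - len(parts))
    return tuple(parts)
```

With an RDD of lines and an active SparkSession, something like `rdd.map(to_three_columns).toDF(["c1", "c2", "c3"])` would turn the tuples into a three-column DataFrame (the column names are placeholders).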

The pyspark.sql Module Is Used for Working With Structured Data

The `name` parameter (a `str`) is the directory to the input data files… Out of the box, PySpark supports reading files in CSV, JSON, and many more file formats into a PySpark DataFrame, and a DataFrame can also be created from a text file. For the custom data source approach, there is a good YouTube video explaining the components you'd need.
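The format-specific readers can be sketched as thin wrappers around `spark.read`. The function names here are hypothetical; `sep`, `header` and `multiLine` are existing `DataFrameReader` options. By default `spark.read.json` expects one JSON object per line, so a file holding a single array of objects spread over several lines generally needs `multiLine=True`.

```python
def read_delimited(spark, path, sep=","):
    """Load a delimited text file as a DataFrame, using row one as the header."""
    return spark.read.csv(path, sep=sep, header=True)


def read_json(spark, path, multi_line=False):
    """Load JSON as a DataFrame; set multi_line for records spanning lines."""
    return spark.read.json(path, multiLine=multi_line)
```

For a pipe-delimited file, `read_delimited(spark, "data.psv", sep="|")` would be the call, where `spark` is an existing SparkSession and the file name is a placeholder.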

A Text File for Reading and Processing

Start with the imports: `from pyspark.sql import SparkSession`, `from pyspark…` Parquet files can be read as well. With the RDD API you can read all text files from a directory into a single RDD, or read all text files matching a pattern into a single RDD.

Basically You'd Create a New Data Source That Knows How to Read Files

Text files, because of their free form, can contain data in a very convoluted fashion. This article shows you how to read Apache common log files. First, create an RDD by reading a text file; multiple text files can also be read into a single RDD.
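For Apache common log files, each line of the RDD still has to be parsed into fields. The following is a rough sketch using a regular expression for the Common Log Format (`host ident authuser [date] "request" status bytes`); the names `CLF_PATTERN` and `parse_clf_line` are hypothetical, and real logs may need a looser pattern.

```python
import re

# One named group per Common Log Format field.
CLF_PATTERN = re.compile(
    r'^(?P<host>\S+) (?P<ident>\S+) (?P<user>\S+) '
    r'\[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-)$'
)


def parse_clf_line(line):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = CLF_PATTERN.match(line.strip())
    if match is None:
        return None
    fields = match.groupdict()
    fields["status"] = int(fields["status"])
    # A size of "-" means no body was sent; treat it as zero bytes.
    fields["size"] = 0 if fields["size"] == "-" else int(fields["size"])
    return fields
```

Applied to an RDD of lines, this could look like `sc.textFile(path).map(parse_clf_line).filter(lambda r: r is not None)`, which drops lines that do not match.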

If you really want to do this, you can write a new data reader that can handle this format natively. `spark.read` returns a `DataFrameReader`, which is used to read data from various data sources such as CSV, JSON, Parquet, and Avro. You can also write a DataFrame into a text file and read it back. Using the `SparkContext.textFile()` method we can read a text (.txt) file into an RDD.
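Writing a DataFrame into a text file and reading it back can be sketched as follows, assuming a local Spark installation. The function names and the `alphabets` sample column are illustrative; the text writer expects a DataFrame with a single string column, and the reader returns each line in a column named `value`.

```python
import tempfile


def write_and_read_back(spark, values, directory):
    """Write `values` as a one-column text dataset, then read it back sorted."""
    df = spark.createDataFrame([(v,) for v in values], schema=["alphabets"])
    # The text writer accepts a DataFrame with a single string column.
    df.write.mode("overwrite").text(directory)
    # spark.read.text() returns one row per line in a column named "value".
    loaded = spark.read.text(directory)
    return sorted(row.value for row in loaded.collect())


def main():
    # Imported here so the helper above loads even where Spark is absent.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder.master("local[1]")
        .appName("text-roundtrip")
        .getOrCreate()
    )
    try:
        with tempfile.TemporaryDirectory() as d:
            print(write_and_read_back(spark, ["a", "b", "c"], d))
    finally:
        spark.stop()
```

The result is sorted after collecting because Spark does not guarantee row order across part files.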