Spark Read Delta Table

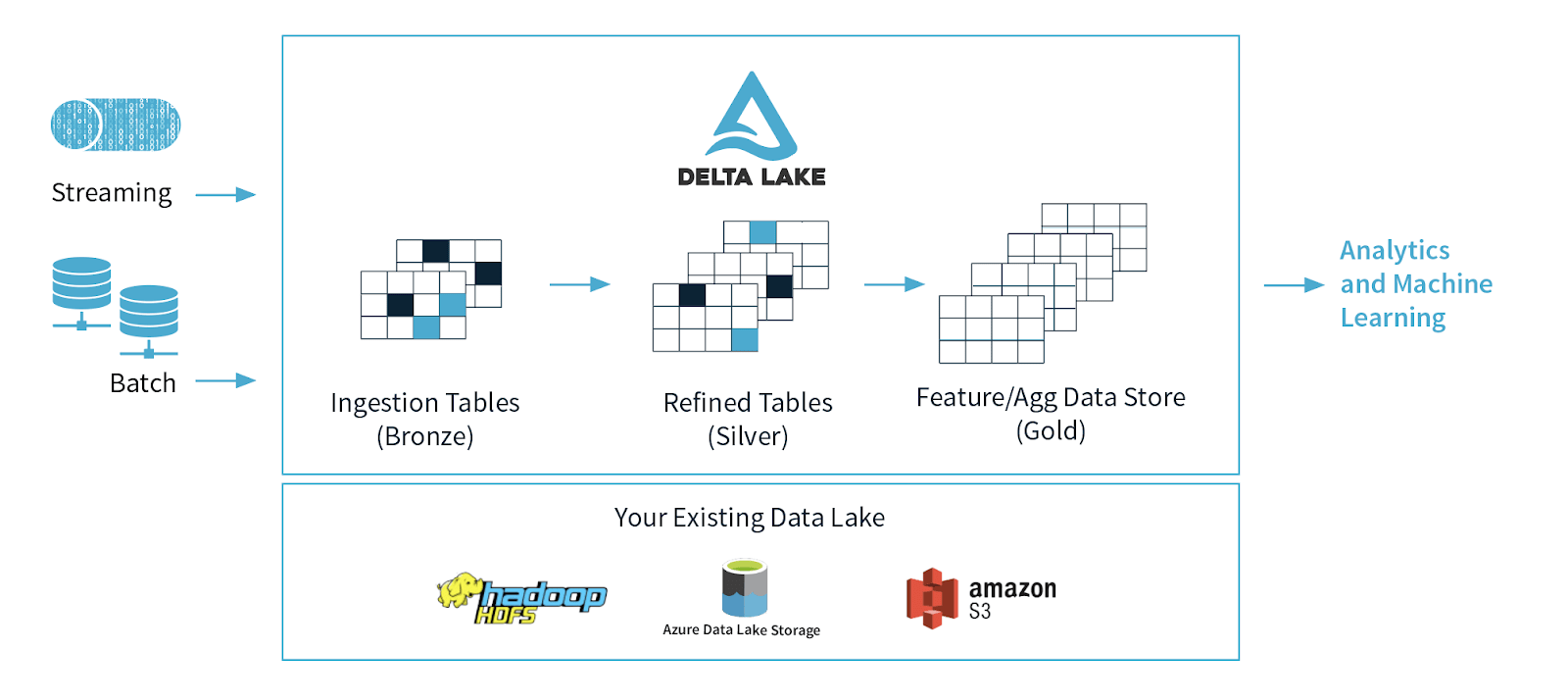

To read a Delta table with Spark, first set up Apache Spark with Delta Lake. For many Delta Lake operations, you enable integration with Apache Spark by setting configurations when you create the SparkSession. Delta Lake supports most of the options provided by the Apache Spark DataFrame read and write APIs for performing batch reads. If the Delta Lake table is already stored in the catalog (also known as the metastore), it can be read by name instead of by path. `timestampAsOf` works as a parameter in `SparkR::read.df`. One collected example uses a little PySpark code to create a Delta table in a Synapse notebook, and the Azure Databricks tutorial introduces common Delta Lake operations. Related reading: "Streaming Data in a Delta Table Using Spark Structured Streaming" by Sudhakar Pandhare (Globant, on Medium).
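A batch read with an optional time-travel snapshot can be sketched as below. This is a minimal illustration, not a definitive implementation: the helper name `read_delta` and its parameters are hypothetical, and `spark` is assumed to be a `SparkSession` that already has Delta Lake available. The `versionAsOf` and `timestampAsOf` reader options are the ones Delta Lake documents for time travel.

```python
from typing import Any, Optional


def read_delta(spark: Any, path: str,
               version_as_of: Optional[int] = None,
               timestamp_as_of: Optional[str] = None) -> Any:
    """Batch-read the Delta table at `path`, optionally at an older snapshot.

    `spark` is assumed to be a SparkSession configured for Delta Lake.
    """
    if version_as_of is not None and timestamp_as_of is not None:
        raise ValueError("pass version_as_of or timestamp_as_of, not both")

    reader = spark.read.format("delta")
    if version_as_of is not None:
        # Read the table as of a specific commit version.
        reader = reader.option("versionAsOf", version_as_of)
    if timestamp_as_of is not None:
        # Read the table as it was at a given timestamp string.
        reader = reader.option("timestampAsOf", timestamp_as_of)
    return reader.load(path)
```

Calling `read_delta(spark, path)` with no snapshot argument reads the latest version of the table.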

To load a Delta table into a PySpark DataFrame, use `spark.read.format("delta").load(path)`. The `spark.read.delta()` shorthand quoted in some snippets is available in Scala through `io.delta.implicits._`, but it is not a method of the standard PySpark `DataFrameReader`. If the Delta Lake table is already stored in the catalog (also known as the metastore), it can be read by name instead of by path. A Scala example begins with `val path = "..."`, `val partition = "year = '2019'"`, `val numFilesPerPartition = 16`, and then `spark.read.format("delta").load(path)`. The `deltasharing` keyword is supported for Apache Spark DataFrame read operations. A common question is how to use a Delta table as a stream source; see "Streaming Data in a Delta Table Using Spark Structured Streaming" by Sudhakar Pandhare (Globant, on Medium).

Delta tables support a number of utility commands. A common question is how to use a Delta table as a stream source; see "Streaming Data in a Delta Table Using Spark Structured Streaming" by Sudhakar Pandhare (Globant, on Medium). A Scala read begins with `val path = "..."`, `val partition = "year = '2019'"`, `val numFilesPerPartition = 16`, and then `spark.read.format("delta").load(path)`. One collected example uses a little PySpark code to create a Delta table in a Synapse notebook.

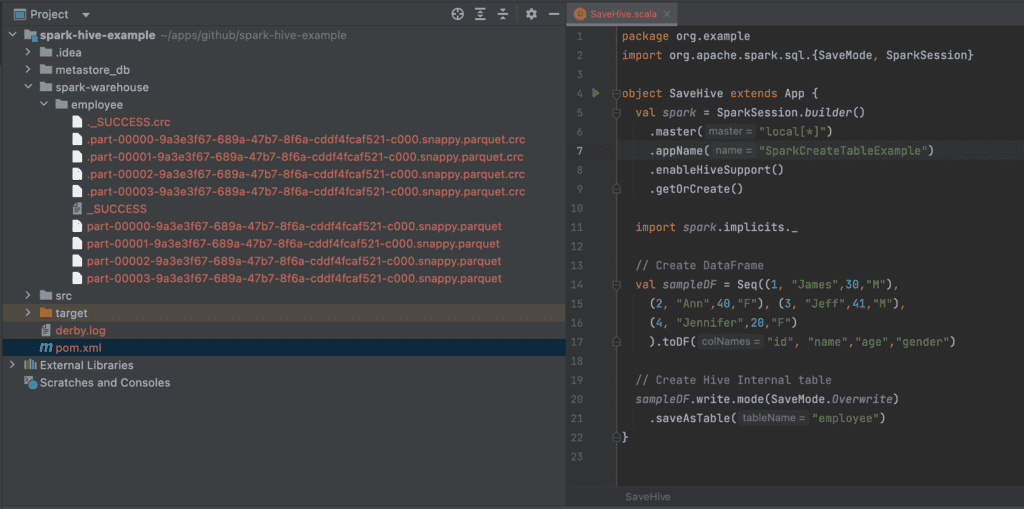

Spark SQL Read Hive Table Spark By {Examples}

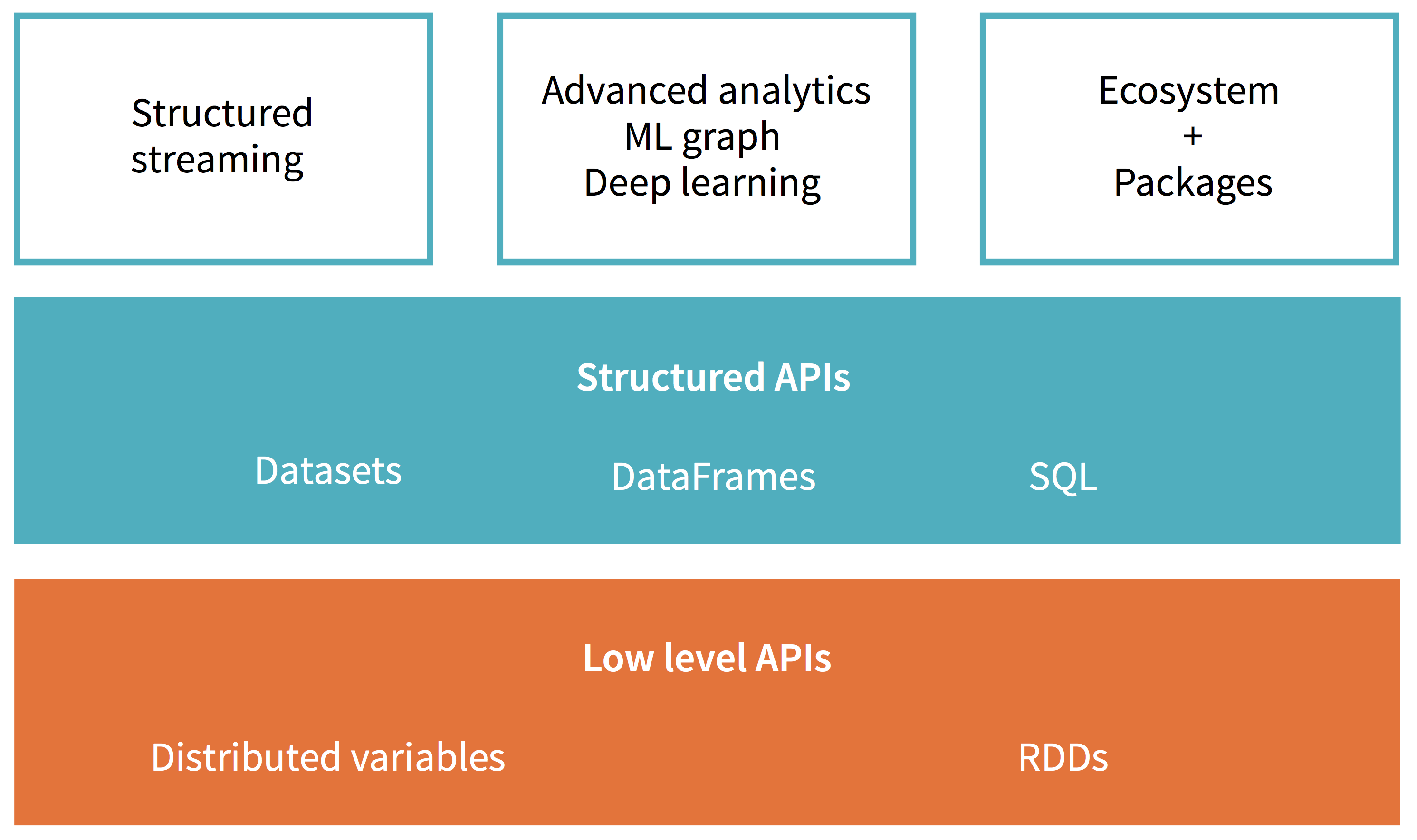

The Databricks tutorial (also published for Azure Databricks) introduces common Delta Lake operations. Set up Apache Spark with Delta Lake, then read from Delta Lake into a Spark DataFrame. If the Delta Lake table is already stored in the catalog (also known as the metastore), it can be read by name instead of by path.
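The setup step can be sketched as follows. The helper name `with_delta_lake` is hypothetical; the two configuration keys and values are the ones the Delta Lake quickstart documents for enabling its SQL extension and catalog. The sketch assumes the Delta Lake package is already on the Spark classpath, which it does not arrange itself.

```python
from typing import Any


def with_delta_lake(builder: Any) -> Any:
    """Add Delta Lake's session settings to a SparkSession builder.

    `builder` is assumed to be `SparkSession.builder` (or anything with a
    chainable `.config(key, value)` method).
    """
    return (
        builder
        # Registers Delta's SQL commands and parser extensions.
        .config("spark.sql.extensions",
                "io.delta.sql.DeltaSparkSessionExtension")
        # Routes the default catalog through Delta's catalog implementation.
        .config("spark.sql.catalog.spark_catalog",
                "org.apache.spark.sql.delta.catalog.DeltaCatalog")
    )
```

In a real job this would typically be used as `with_delta_lake(SparkSession.builder.appName("demo")).getOrCreate()`, where the application name is only a placeholder.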

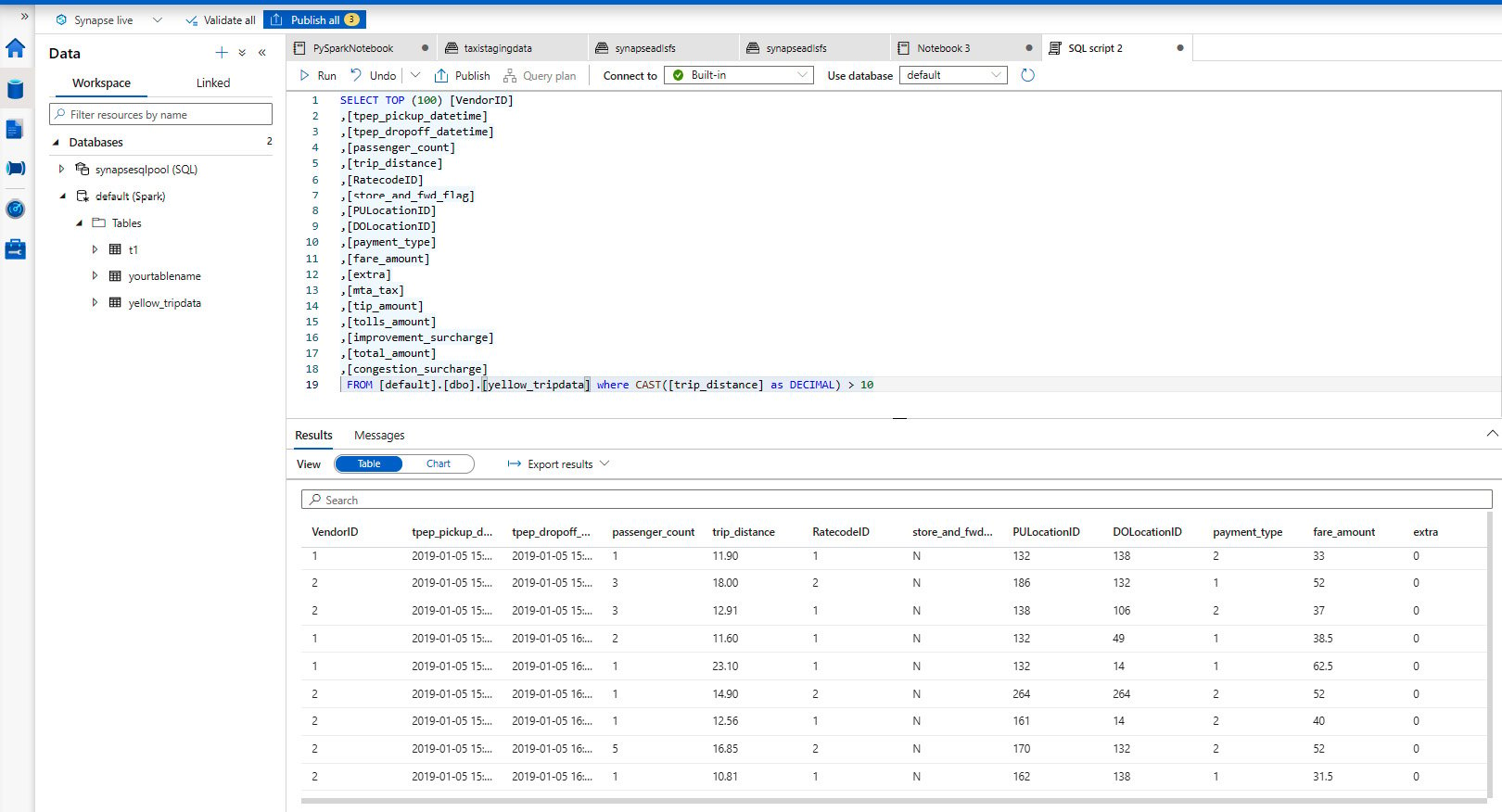

Reading and writing data from ADLS Gen2 using PySpark Azure Synapse

The `deltasharing` keyword is supported for Apache Spark DataFrame read operations. To load a Delta table into a PySpark DataFrame, use `spark.read.format("delta").load(path)`; the `spark.read.delta()` shorthand is available in Scala through `io.delta.implicits._`, but it is not a method of the standard PySpark `DataFrameReader`.

Spark Delta Lake Vacuum or Retention in Spark Delta Table with Demo

`timestampAsOf` works as a parameter in `SparkR::read.df`. The Databricks tutorial introduces common Delta Lake operations. One collected example uses a little PySpark code to create a Delta table in a Synapse notebook.

The Data Engineer's Guide to Apache Spark™ and Delta Lake Databricks

In Python, Delta Live Tables determines whether to update a dataset as a materialized view or as a streaming table. Delta Lake supports most of the options provided by the Apache Spark DataFrame read and write APIs for performing batch reads.

Delta Lake in Spark Update, Delete with Spark Delta Table Session

Read from Delta Lake into a Spark DataFrame. `timestampAsOf` works as a parameter in `SparkR::read.df`. If the Delta Lake table is already stored in the catalog (also known as the metastore), it can be read by name instead of by path. Delta Lake is deeply integrated with Spark Structured Streaming through `readStream` and `writeStream`. Delta tables support a number of utility commands.
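The `readStream` and `writeStream` integration can be sketched as below, using one Delta table as the stream source and another as the sink. The helper name `copy_delta_stream` and all three path parameters are hypothetical, and `spark` is assumed to be a `SparkSession` configured for Delta Lake. Append output mode and a checkpoint location are one reasonable choice here, not the only one.

```python
from typing import Any


def copy_delta_stream(spark: Any, source_path: str, sink_path: str,
                      checkpoint_path: str) -> Any:
    """Stream new rows from one Delta table into another.

    Returns the value of `.start(...)`, which on a real SparkSession is the
    running streaming query handle.
    """
    # Use the Delta table at `source_path` as a streaming source.
    stream = spark.readStream.format("delta").load(source_path)

    return (
        stream.writeStream
        .format("delta")
        .outputMode("append")
        # Structured Streaming tracks progress in the checkpoint directory.
        .option("checkpointLocation", checkpoint_path)
        .start(sink_path)
    )
```

The caller would normally keep the returned handle and call `awaitTermination()` or `stop()` on it.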

databricks Creating table with Apache Spark using delta format got

In Python, Delta Live Tables determines whether to update a dataset as a materialized view or as a streaming table. Delta Lake is deeply integrated with Spark Structured Streaming through `readStream` and `writeStream`. The `deltasharing` keyword is supported for Apache Spark DataFrame read operations.

Azure Databricks Delta ETL Automation

For many Delta Lake operations, you enable integration with Apache Spark by setting configurations when you create the SparkSession. In Python, Delta Live Tables determines whether to update a dataset as a materialized view or as a streaming table. Related reading: "Streaming Data in a Delta Table Using Spark Structured Streaming" by Sudhakar Pandhare (Globant, on Medium).

How Delta Lake 0.7.0 and Apache Spark 3.0 Combine to Support Metastore

`timestampAsOf` works as a parameter in `SparkR::read.df`. The Azure Databricks tutorial introduces common Delta Lake operations. Delta tables support a number of utility commands. Set up Apache Spark with Delta Lake before running any of them.

Spark Delta Create Table operation YouTube

Set up Apache Spark with Delta Lake. Read a Delta Lake table on some file system and return a DataFrame. One collected example uses a little PySpark code to create a Delta table in a Synapse notebook.

Spark Essentials — How to Read and Write Data With PySpark Reading

The Databricks tutorial introduces common Delta Lake operations. Set up Apache Spark with Delta Lake. For many Delta Lake operations, you enable integration with Apache Spark by setting configurations when you create the SparkSession. Delta tables support a number of utility commands.

Using a Little PySpark Code to Create a Delta Table in a Synapse Notebook

To load a Delta table into a PySpark DataFrame, use `spark.read.format("delta").load(path)`; the `spark.read.delta()` shorthand is available in Scala through `io.delta.implicits._`, but it is not a method of the standard PySpark `DataFrameReader`. The Databricks tutorial introduces common Delta Lake operations. The `deltasharing` keyword is supported for Apache Spark DataFrame read operations. Related reading: "Streaming Data in a Delta Table Using Spark Structured Streaming" by Sudhakar Pandhare (Globant, on Medium).

Delta Lake is deeply integrated with Spark Structured Streaming through `readStream` and `writeStream`. In Python, Delta Live Tables determines whether to update a dataset as a materialized view or as a streaming table.

Set up Apache Spark with Delta Lake. A common question is how to use a Delta table as a stream source.

`val path = "..."`, `val partition = "year = '2019'"`, `val numFilesPerPartition = 16`, `spark.read.format("delta").load(path)`

Read a Delta Lake table on some file system and return a DataFrame. If the Delta Lake table is already stored in the catalog (also known as the metastore), it can be read by name instead of by path. Delta Lake supports most of the options provided by the Apache Spark DataFrame read and write APIs for performing batch reads. The Azure Databricks tutorial introduces common Delta Lake operations.
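The choice between reading by file-system path and reading a table registered in the catalog can be sketched as below. The helper name `load_delta` and its parameters are hypothetical, and `spark` is assumed to be a `SparkSession` configured for Delta Lake. `DataFrameReader.table` and `DataFrameReader.format(...).load(...)` are standard Spark calls.

```python
from typing import Any, Optional


def load_delta(spark: Any, path: Optional[str] = None,
               table_name: Optional[str] = None) -> Any:
    """Read a Delta table either by path or by its catalog name."""
    if (path is None) == (table_name is None):
        raise ValueError("pass exactly one of path or table_name")

    if table_name is not None:
        # The table is registered in the catalog (metastore): read it by name.
        return spark.read.table(table_name)

    # Otherwise read the Delta files directly from the file system.
    return spark.read.format("delta").load(path)
```

Reading by name keeps storage locations out of application code, while reading by path works for tables that were never registered.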