Spark Read From S3

Spark Read From S3 - It will download all hadoop missing packages that will allow you to execute spark jobs with s3… Cloudera components writing data to s3 are constrained by the inherent limitation of amazon s3 known as eventual consistency. Web reading files from s3 using pyspark is a common task for data scientists working with big data. Web 2 answers sorted by: Web the following example illustrates how to read a text file from amazon s3 into an rdd, convert the rdd to a dataframe, and then use the data source api to write the dataframe into a parquet file on amazon s3: This guide has shown you how to set up your aws credentials, initialize pyspark, read a file from s3, and work with the data. Web spark read from & write to parquet file | amazon s3 bucket in this spark tutorial, you will learn what is apache parquet, it’s advantages and how to read the parquet file from amazon s3 bucket into dataframe and write dataframe in parquet file to amazon s3. Web with amazon emr release 5.17.0 and later, you can use s3 select with spark on amazon emr. You are writing a spark job to process large amount of data on s3 with emr, but you might want to first understand the data better or test your spark job. Connect to s3 with unity catalog access s3 buckets using instance profiles access s3 buckets with uris and aws keys access s3.

You are writing a spark job to process large amount of data on s3 with emr, but you might want to first understand the data better or test your spark job. Web june 14, 2023 this article explains how to connect to aws s3 from databricks. Cloudera components writing data to s3 are constrained by the inherent limitation of amazon s3 known as eventual consistency. Read a text file in amazon s3… Web spark sql provides spark.read.csv(path) to read a csv file from amazon s3, local file system, hdfs, and many other data sources into spark dataframe and. Web with amazon emr release 5.17.0 and later, you can use s3 select with spark on amazon emr. To be more specific, perform read and write operations on aws s3 using apache spark. Databricks recommends using unity catalog external locations to connect to s3. Web spark read from & write to parquet file | amazon s3 bucket in this spark tutorial, you will learn what is apache parquet, it’s advantages and how to read the parquet file from amazon s3 bucket into dataframe and write dataframe in parquet file to amazon s3. For more information, see data storage considerations.

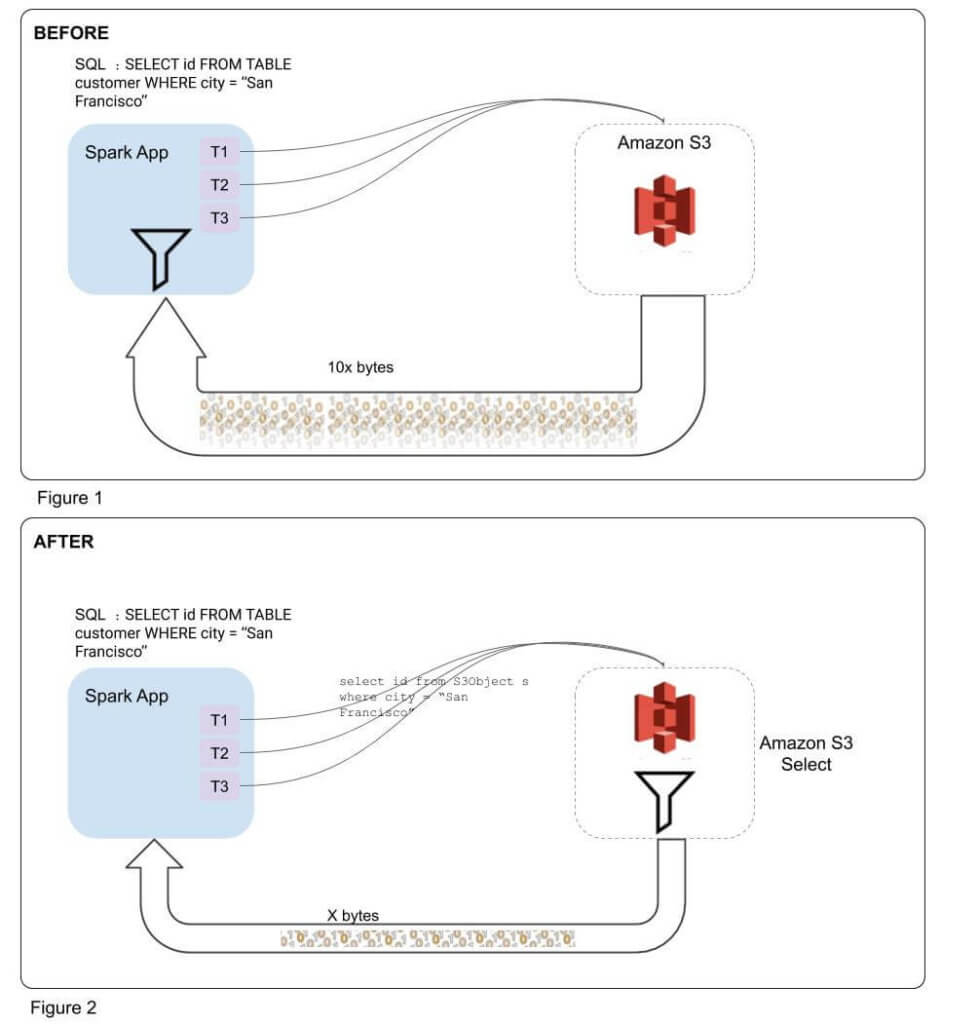

Cloudera components writing data to s3 are constrained by the inherent limitation of amazon s3 known as eventual consistency. You are writing a spark job to process large amount of data on s3 with emr, but you might want to first understand the data better or test your spark job. S3 select allows applications to retrieve only a subset of data from an object. I want to read all parquet files from an s3 bucket, including all those in the subdirectories (these are actually prefixes). To be more specific, perform read and write operations on aws s3 using apache spark. Web spark read from & write to parquet file | amazon s3 bucket in this spark tutorial, you will learn what is apache parquet, it’s advantages and how to read the parquet file from amazon s3 bucket into dataframe and write dataframe in parquet file to amazon s3. Databricks recommends using unity catalog external locations to connect to s3. Web when spark is running in a cloud infrastructure, the credentials are usually automatically set up. It will download all hadoop missing packages that will allow you to execute spark jobs with s3… S3 select can improve query performance for csv and json files in some applications by pushing down processing to amazon s3.

Improving Apache Spark Performance with S3 Select Integration Qubole

S3 select allows applications to retrieve only a subset of data from an object. Web 2 answers sorted by: Web spark read from & write to parquet file | amazon s3 bucket in this spark tutorial, you will learn what is apache parquet, it’s advantages and how to read the parquet file from amazon s3 bucket into dataframe and write.

Spark Read Json From Amazon S3 Spark By {Examples}

S3 select allows applications to retrieve only a subset of data from an object. To be more specific, perform read and write operations on aws s3 using apache spark. Cloudera components writing data to s3 are constrained by the inherent limitation of amazon s3 known as eventual consistency. I have wanted to switch from watching the anime to reading the.

PySpark Tutorial24 How Spark read and writes the data on AWS S3

Databricks recommends using unity catalog external locations to connect to s3. Web 2 answers sorted by: To be more specific, perform read and write operations on aws s3 using apache spark. Cloudera components writing data to s3 are constrained by the inherent limitation of amazon s3 known as eventual consistency. Web june 14, 2023 this article explains how to connect.

Read and write data in S3 with Spark Gigahex Open Source Data

Web june 14, 2023 this article explains how to connect to aws s3 from databricks. Web you can read and write spark sql dataframes using the data source api. Web the objective of this article is to build an understanding of basic read and write operations on amazon web storage service s3. Web reading files from s3 using pyspark is.

Spark Read and Write Apache Parquet Spark By {Examples}

Web spark sql provides spark.read.csv(path) to read a csv file from amazon s3, local file system, hdfs, and many other data sources into spark dataframe and. It will download all hadoop missing packages that will allow you to execute spark jobs with s3… I want to read all parquet files from an s3 bucket, including all those in the subdirectories.

Spark Architecture Apache Spark Tutorial LearntoSpark

Web with amazon emr release 5.17.0 and later, you can use s3 select with spark on amazon emr. I have wanted to switch from watching the anime to reading the manga and i would like to know what chapter season 3 starts in the manga. Web 2 answers sorted by: For more information, see data storage considerations. Web reading files.

One Stop for all Spark Examples — Write & Read CSV file from S3 into

Web the objective of this article is to build an understanding of basic read and write operations on amazon web storage service s3. Specifying credentials to access s3 from spark Web reading data from s3 subdirectories in pyspark. S3 select can improve query performance for csv and json files in some applications by pushing down processing to amazon s3. S3.

Spark에서 S3 데이터 읽어오기 내가 다시 보려고 만든 블로그

For more information, see data storage considerations. Web spark read from & write to parquet file | amazon s3 bucket in this spark tutorial, you will learn what is apache parquet, it’s advantages and how to read the parquet file from amazon s3 bucket into dataframe and write dataframe in parquet file to amazon s3. Databricks recommends using unity catalog.

Spark SQL Architecture Sql, Spark, Apache spark

S3 select allows applications to retrieve only a subset of data from an object. Cloudera components writing data to s3 are constrained by the inherent limitation of amazon s3 known as eventual consistency. S3 select can improve query performance for csv and json files in some applications by pushing down processing to amazon s3. Web with amazon emr release 5.17.0.

Tecno Spark 3 Pro Review Raising the bar for Affordable midrange

Web the following example illustrates how to read a text file from amazon s3 into an rdd, convert the rdd to a dataframe, and then use the data source api to write the dataframe into a parquet file on amazon s3: Web with amazon emr release 5.17.0 and later, you can use s3 select with spark on amazon emr. Web.

Web Reading Files From S3 Using Pyspark Is A Common Task For Data Scientists Working With Big Data.

S3 select allows applications to retrieve only a subset of data from an object. If you're talking about those 2 episodes that netflix thinks is s3… Web reading files from s3 with spark locally. Web reading data from s3 subdirectories in pyspark.

Web When Spark Is Running In A Cloud Infrastructure, The Credentials Are Usually Automatically Set Up.

Web 2 answers sorted by: Connect to s3 with unity catalog access s3 buckets using instance profiles access s3 buckets with uris and aws keys access s3. Web amazon emr offers features to help optimize performance when using spark to query, read and write data saved in amazon s3. I want to read all parquet files from an s3 bucket, including all those in the subdirectories (these are actually prefixes).

It Will Download All Hadoop Missing Packages That Will Allow You To Execute Spark Jobs With S3…

Web the following example illustrates how to read a text file from amazon s3 into an rdd, convert the rdd to a dataframe, and then use the data source api to write the dataframe into a parquet file on amazon s3: Web the objective of this article is to build an understanding of basic read and write operations on amazon web storage service s3. With these steps, you should be able to read any file from s3. Web you can read and write spark sql dataframes using the data source api.

Web What Chapter Of The Manga Is Season 3.

I have wanted to switch from watching the anime to reading the manga and i would like to know what chapter season 3 starts in the manga. Web 2 answers sorted by: Cloudera components writing data to s3 are constrained by the inherent limitation of amazon s3 known as eventual consistency. You are writing a spark job to process large amount of data on s3 with emr, but you might want to first understand the data better or test your spark job.